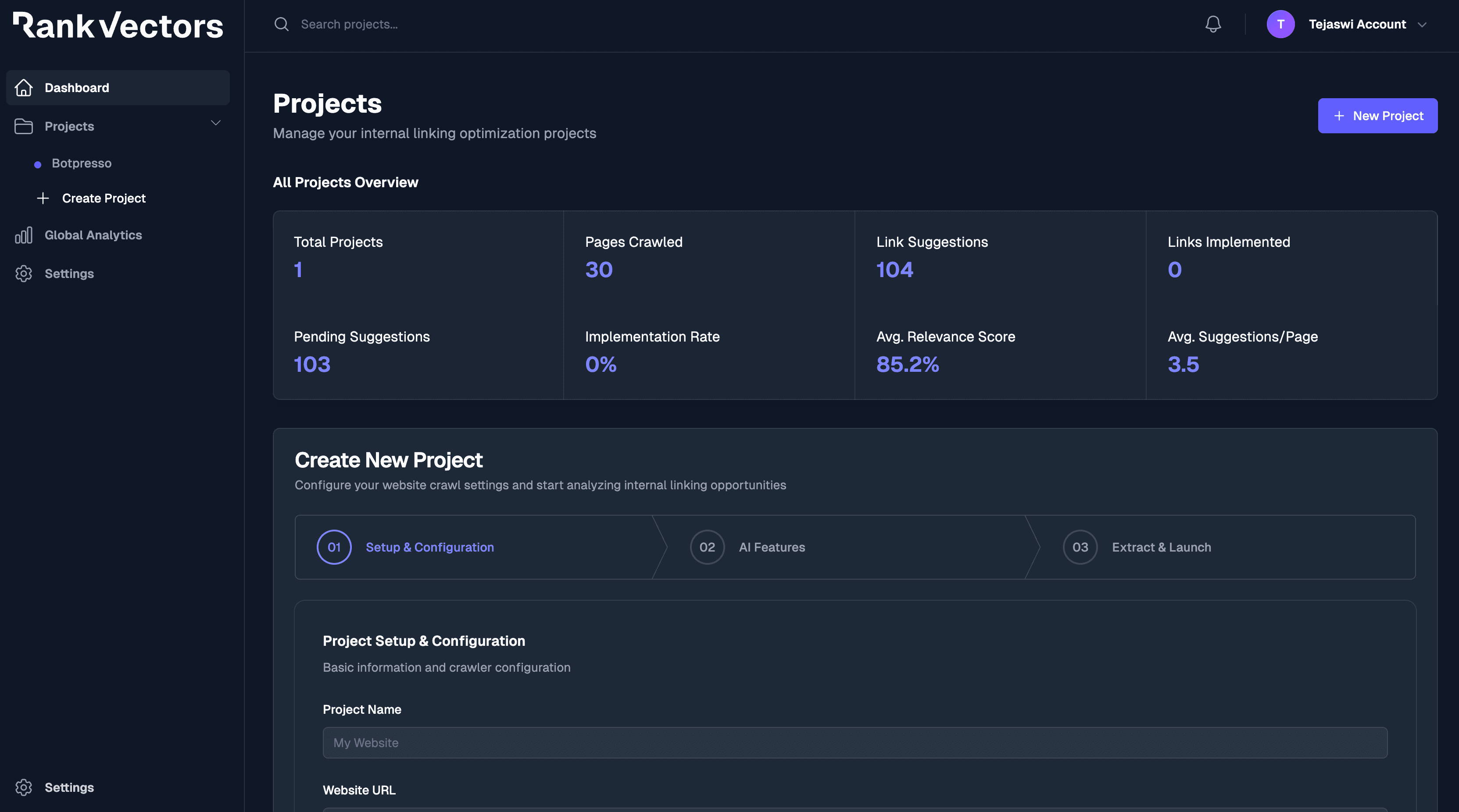

AI-powered internal linking, from sync to implementation

Configure, sync, analyze, and implement optimized internal links at scale. RankVectors automates the heavy lifting while giving you full control.

Results at a glance

AI-powered syncing, analysis, and implementation—delivering measurable internal linking impact.

- Internal links added: 4,000,000+

- Pages synced: 1,000,000+

- Avg. relevance score: 94%

- Link suggestions generated: 5,000,000+

Deploy faster

Everything you need to optimize internal links

Configure, sync, analyze, and implement—RankVectors streamlines each step so you can ship impactful internal linking updates confidently.

- Sync engine

Fast, resilient content syncing with smart retries and queueing. Captures metadata, headings, links, and status codes in a form that is ready for analysis.

- Analysis

AI-driven internal linking recommendations using embeddings, relevance, and authority flow—backed by analytics and version history.

- Implementation

Roll out recommended links safely with SDKs and CMS integrations, preview changes, and track impact with analytics and versioning.

Adaptive Syncing

RankVectors Sync Engine

Sync large sites quickly and safely. Respect robots rules, throttle traffic, and precisely control scope with sitemaps, depth limits, and include/exclude patterns. Extract clean content, metadata, and link graphs for high-quality analysis.

- Configurable & respectful. Custom user-agent, robots-aware behavior, sync-rate controls, depth settings, and domain/path filters.

- Resilient at scale. Queueing and retries to handle transient errors and large sites without timeouts.

- Rich extraction. Content, headings, canonical URLs, status codes, and links captured for downstream AI analysis.
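
To make the scope controls concrete, here is a minimal Python sketch of how robots rules, a depth limit, and include/exclude patterns could combine into a single "should this URL be synced?" check. It is an illustration only: `SyncScope`, `in_scope`, the `ExampleSyncBot` user-agent, and the example paths are hypothetical names, not RankVectors' actual configuration schema or API.

```python
from dataclasses import dataclass, field
from fnmatch import fnmatchcase
from urllib.parse import urlparse
from urllib.robotparser import RobotFileParser


@dataclass
class SyncScope:
    """Hypothetical scope settings (not RankVectors' real schema)."""
    user_agent: str = "ExampleSyncBot"
    max_depth: int = 3
    include: list[str] = field(default_factory=lambda: ["/*"])
    exclude: list[str] = field(default_factory=list)


def in_scope(url: str, depth: int, scope: SyncScope, robots: RobotFileParser) -> bool:
    """Return True only if the URL passes depth, robots, exclude, and include checks."""
    path = urlparse(url).path or "/"
    if depth > scope.max_depth:
        return False
    if not robots.can_fetch(scope.user_agent, url):
        return False
    if any(fnmatchcase(path, pattern) for pattern in scope.exclude):
        return False
    return any(fnmatchcase(path, pattern) for pattern in scope.include)


# Parse robots rules from memory so the sketch runs without network access.
robots = RobotFileParser()
robots.parse(["User-agent: *", "Disallow: /admin/"])

scope = SyncScope(include=["/blog/*", "/docs/*"], exclude=["/blog/drafts/*"])

for url, depth in [
    ("https://example.com/blog/post-1", 1),
    ("https://example.com/blog/drafts/wip", 1),
    ("https://example.com/admin/users", 1),
]:
    print(url, in_scope(url, depth, scope, robots))
```

Exclude patterns are checked before include patterns here, so a draft under `/blog/` stays out even though `/blog/*` is included. That ordering is a design choice for this sketch.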

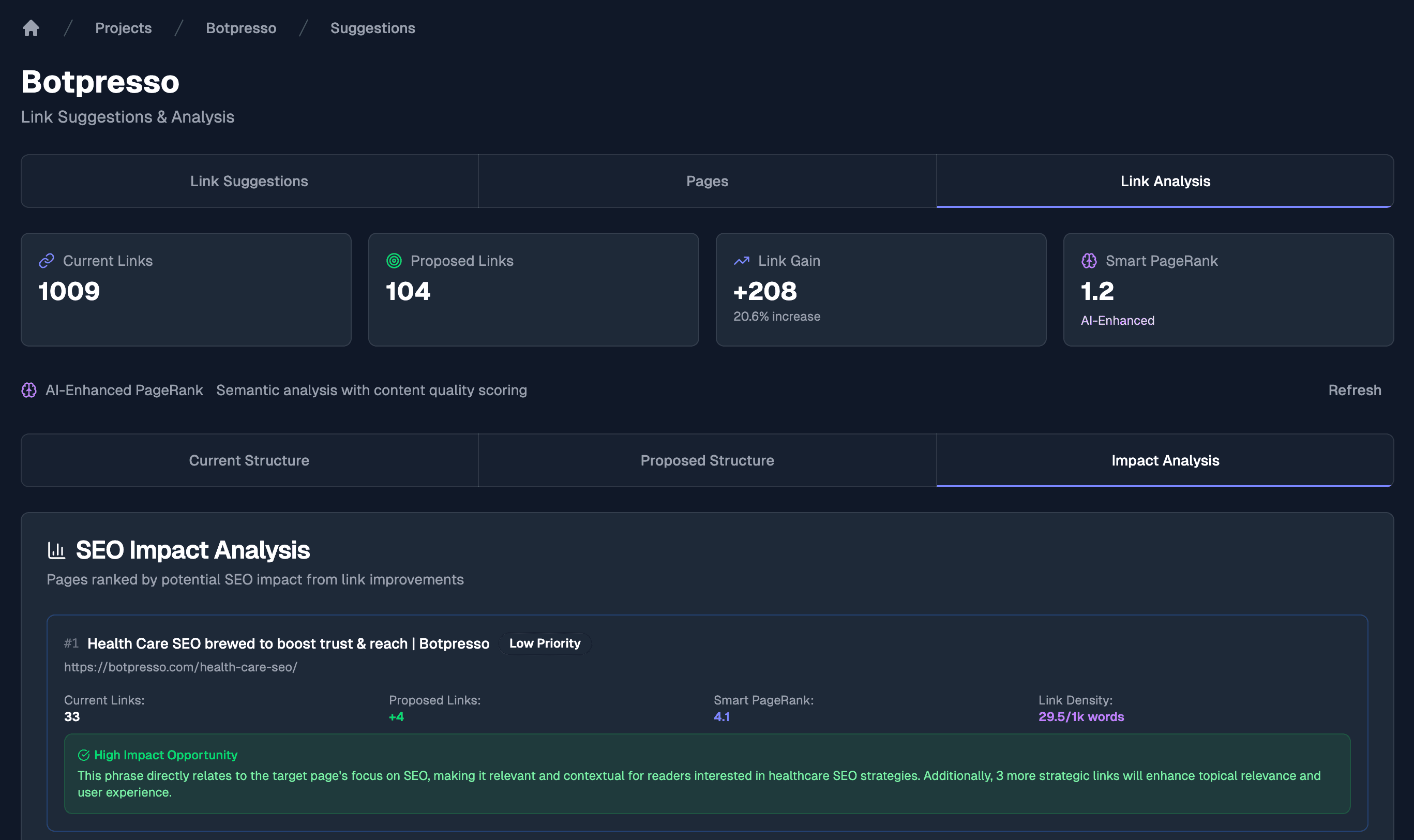

AI Analysis

Semantic relevance, not just keywords

RankVectors analyzes content using vector embeddings to identify the most relevant internal linking opportunities. Get contextual anchor text, authority-aware link paths, and transparent scoring to prioritize impact.

- Contextual match scoring. Embeddings-driven similarity captures meaning and intent to surface high-quality link pairs.

- Quality and safety checks. De-duplication, canonical awareness, noindex/nofollow respect, and conflict avoidance for clean suggestions.

- Analytics-ready output. Rich metadata, relevance scores, and rationales so you can review, sort, and approve with confidence.
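
For readers curious what embeddings-driven similarity looks like in practice, the sketch below ranks candidate link targets by cosine similarity between page vectors and skips pages that are already linked. It is a simplified illustration, not RankVectors' scoring model: the three-dimensional vectors are toy values (real embeddings have hundreds of dimensions), and `suggest_links`, `min_score`, and the page paths are hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def suggest_links(source, embeddings, existing_links=frozenset(), top_k=3, min_score=0.5):
    """Rank other pages by similarity to `source`, skipping pages already linked."""
    src_vec = embeddings[source]
    scored = [
        (cosine_similarity(src_vec, vec), target)
        for target, vec in embeddings.items()
        if target != source and target not in existing_links
    ]
    # Keep only candidates above the relevance threshold, best first.
    scored = sorted((pair for pair in scored if pair[0] >= min_score), reverse=True)
    return [(target, round(score, 3)) for score, target in scored[:top_k]]


# Toy embeddings for illustration only.
embeddings = {
    "/guides/internal-linking": [0.9, 0.1, 0.0],
    "/guides/anchor-text": [0.8, 0.2, 0.1],
    "/blog/site-architecture": [0.7, 0.3, 0.2],
    "/pricing": [0.0, 0.1, 0.9],
}

for target, score in suggest_links("/guides/internal-linking", embeddings):
    print(target, score)
```

With these toy vectors the two guide and blog pages score high and `/pricing` falls below the threshold, which is the behavior a keyword-only match would not guarantee.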

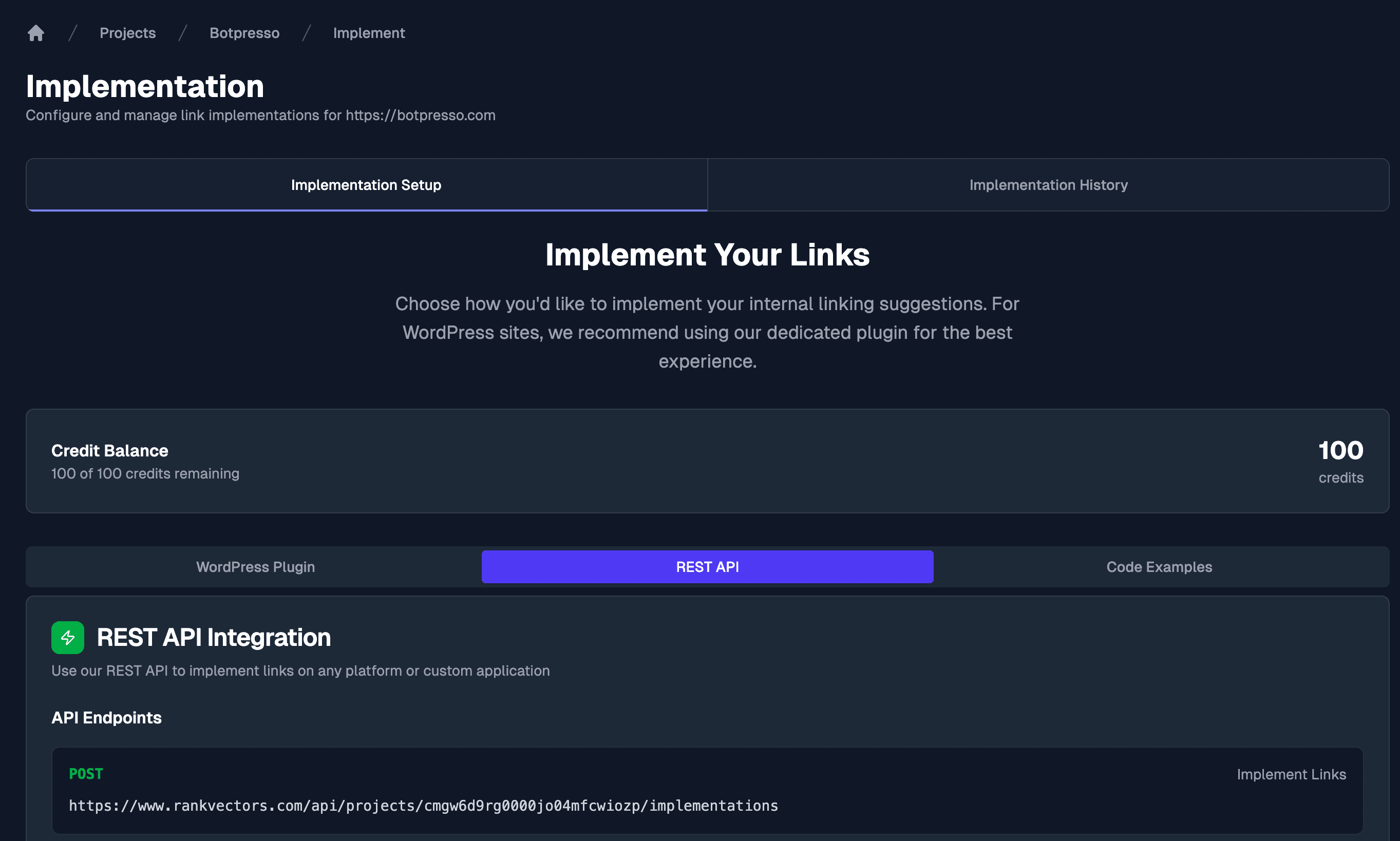

Implementation

From suggestion to live links

Roll out approved internal links safely via SDKs and API. Preview diffs, stage changes, and track impact with analytics and versioning—all with guardrails that respect your content rules.

- Safe by default. Review flows, previews, and rollback support ensure confidence before and after deployment.

- Flexible delivery. Implement via REST API, SDKs, or export for manual workflows—works with your CMS.

- Measurable impact. Versioned changes with analytics tie implementation to outcomes you can trust.
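
As a rough picture of the preview step, the following Python sketch applies one approved suggestion to a piece of Markdown content and renders a unified diff for review. It uses only the standard library; `apply_link`, `preview_diff`, and the sample content are hypothetical and do not represent the RankVectors SDK or API.

```python
import difflib


def apply_link(text: str, anchor: str, href: str) -> str:
    """Wrap the first occurrence of `anchor` in a Markdown link; no-op if absent.

    Naive on purpose: it does not check whether the anchor already sits inside a link.
    """
    idx = text.find(anchor)
    if idx == -1:
        return text
    return text[:idx] + f"[{anchor}]({href})" + text[idx + len(anchor):]


def preview_diff(before: str, after: str, name: str = "page.md") -> str:
    """Return a unified diff between the current and proposed content."""
    return "".join(
        difflib.unified_diff(
            before.splitlines(keepends=True),
            after.splitlines(keepends=True),
            fromfile=f"{name} (current)",
            tofile=f"{name} (proposed)",
        )
    )


current = "Learn how anchor text affects rankings.\n"
proposed = apply_link(current, "anchor text", "/guides/anchor-text")
print(preview_diff(current, proposed))
```

Keeping the `current` string alongside the proposed one is what makes a rollback trivial in this sketch: reverting means writing the stored version back.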

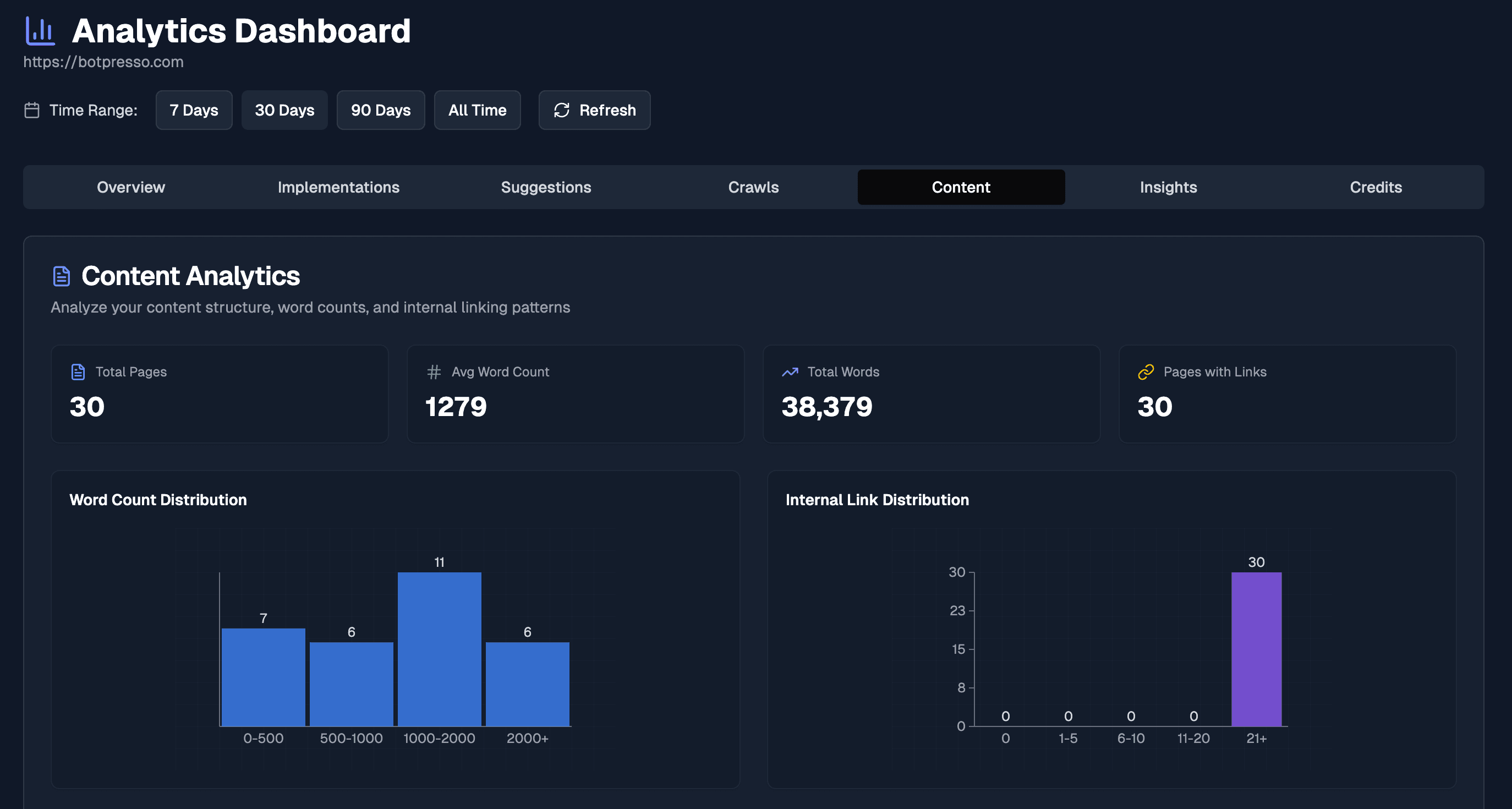

Analytics

Measure impact with clarity

Track sync health, link suggestions, and implementation outcomes in one place. See relevance trends, coverage, and authority flow—then tie improvements back to specific changes.

- Relevance & coverage. Monitor suggestion relevance, anchor diversity, and page coverage to prioritize high-impact changes.

- Quality & safeguards. Detect conflicts, noindex/nofollow issues, and canonical mismatches before they ship.

- Queues & processing. Understand sync throughput, retries, and queue health for reliable operations.

- Advanced insights. Authority flow signals and link graph stats to guide internal linking strategy.

- Powerful API. Export analytics and slice by project, sync, or implementation version via API.

- Audit-ready history. Versioned changes with before/after metrics for clear attribution and rollbacks.

Frequently asked questions

- What is RankVectors?

RankVectors is an AI-powered platform for optimizing internal links. It syncs your site, analyzes content semantically, and recommends and implements high-quality internal links.

- How does the AI analysis work?

We use vector embeddings to understand meaning and context, not just keywords. This powers relevance scoring, contextual anchors, and authority-aware link paths.

- Can I control what gets synced?

Yes. Configure sitemaps, depth limits, include/exclude patterns, custom user-agent, and rate limits. Robots rules are respected by default.

- How are suggestions implemented?

Review and approve changes, then ship via SDKs or REST API. You can stage previews, batch deploy, and roll back if needed.

- Is my data secure?

We use project-level isolation and never mix customer data. Canonical/noindex/nofollow rules are respected. You can delete projects and their data at any time.

- How large a site can RankVectors handle?

We support large sites with resilient queuing and retries. Use filters to focus on high-impact sections; contact us for enterprise-scale guidance.
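
For a sense of what queuing with retries means mechanically, here is a minimal Python sketch of a work queue that re-enqueues URLs after transient failures, with exponential backoff and jitter. It is a generic pattern under stated assumptions, not a description of RankVectors' internals; `process_queue`, `TransientError`, and the default limits are hypothetical.

```python
import random
import time
from collections import deque
from typing import Callable


class TransientError(Exception):
    """Stand-in for a retryable failure such as a timeout or an HTTP 503."""


def process_queue(
    urls: list[str],
    fetch: Callable[[str], str],
    max_attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> tuple[dict[str, str], list[str]]:
    """Fetch every URL, retrying transient failures up to `max_attempts` times."""
    queue = deque((url, 1) for url in urls)
    done: dict[str, str] = {}
    failed: list[str] = []
    while queue:
        url, attempt = queue.popleft()
        try:
            done[url] = fetch(url)
        except TransientError:
            if attempt >= max_attempts:
                failed.append(url)  # give up after the final attempt
                continue
            # Exponential backoff with jitter, then move the URL to the back of the queue.
            sleep(base_delay * 2 ** (attempt - 1) * random.uniform(0.5, 1.5))
            queue.append((url, attempt + 1))
    return done, failed
```

Re-enqueueing at the back means one failing page does not block the rest of the site. A production system would typically schedule the retry rather than sleep in the worker loop.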

Boost your productivity today

Use AI to surface high-impact internal links, resolve content gaps, and improve sync efficiency—so every page gets discovered and contributes to rankings.