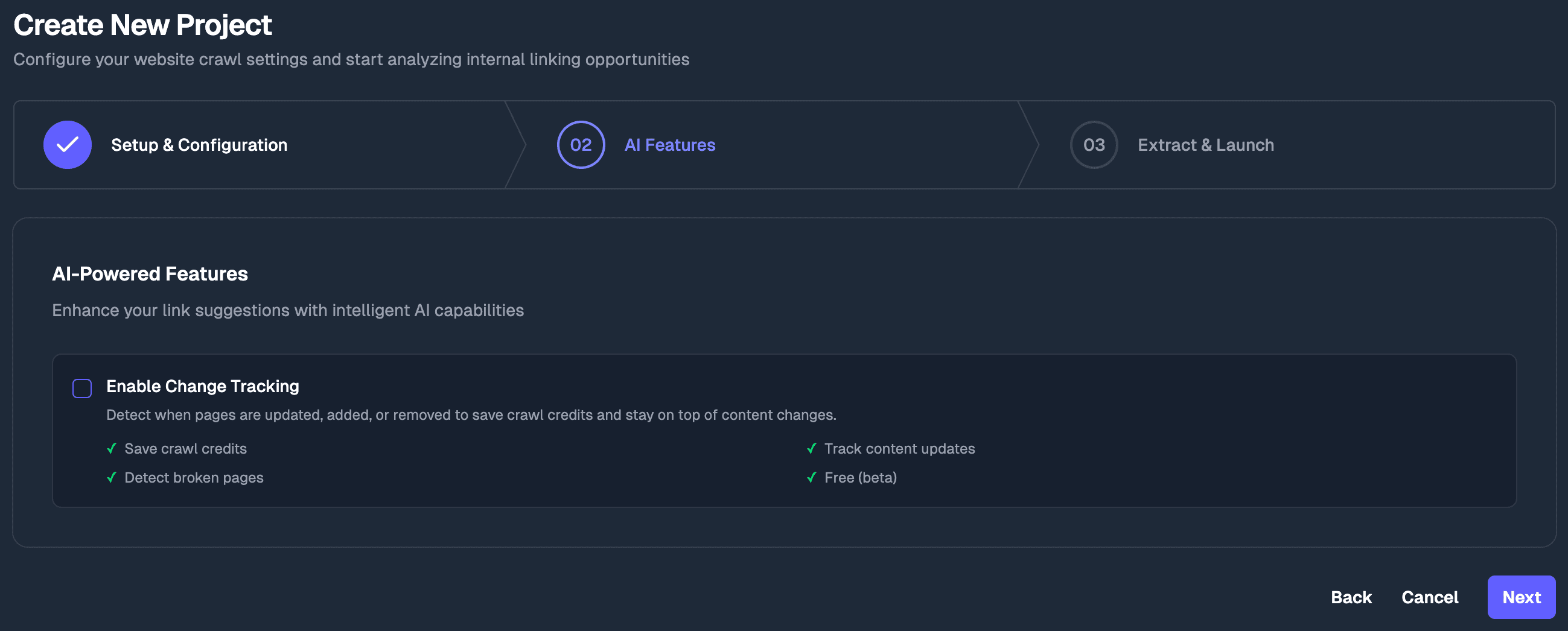

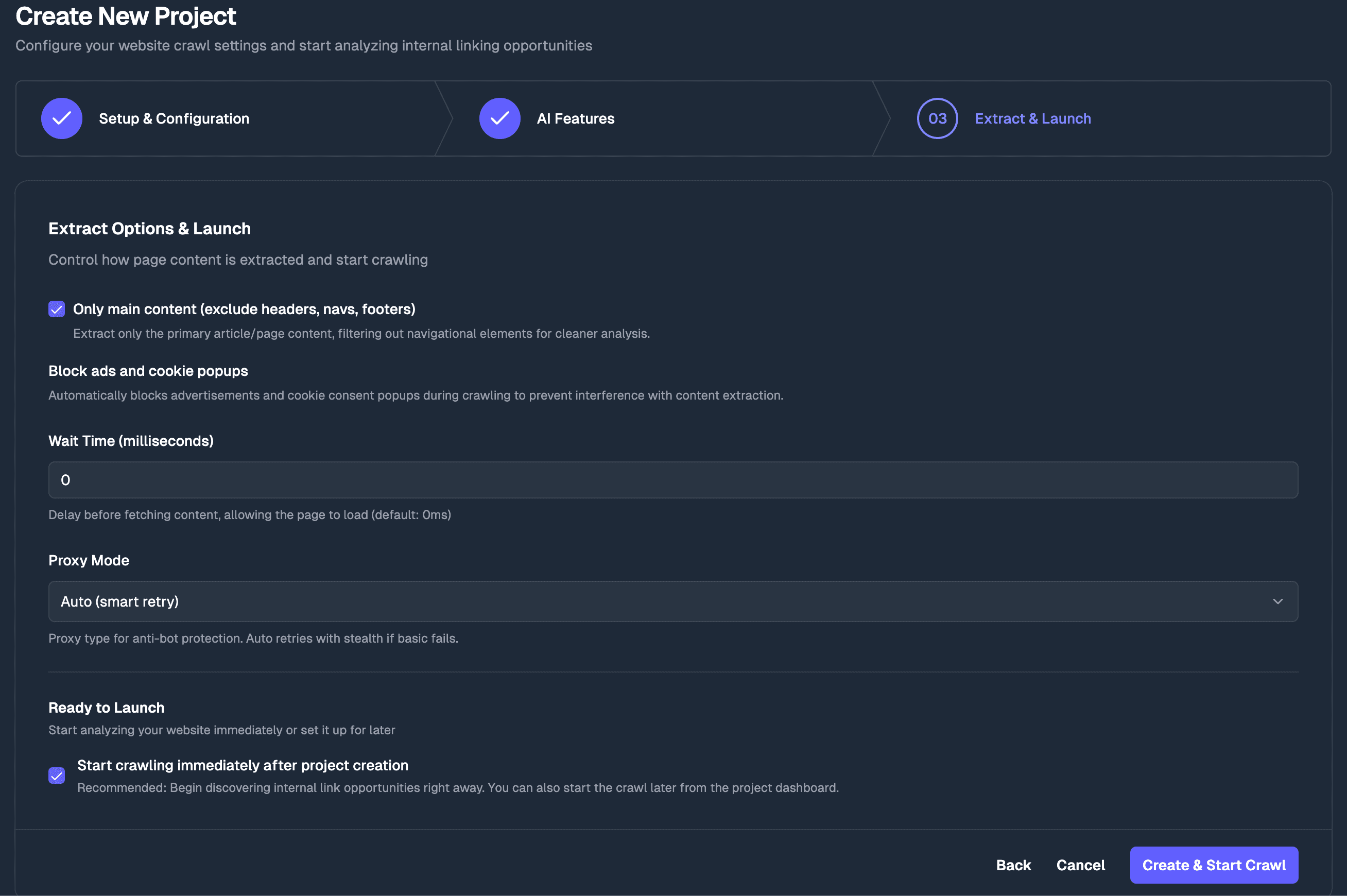

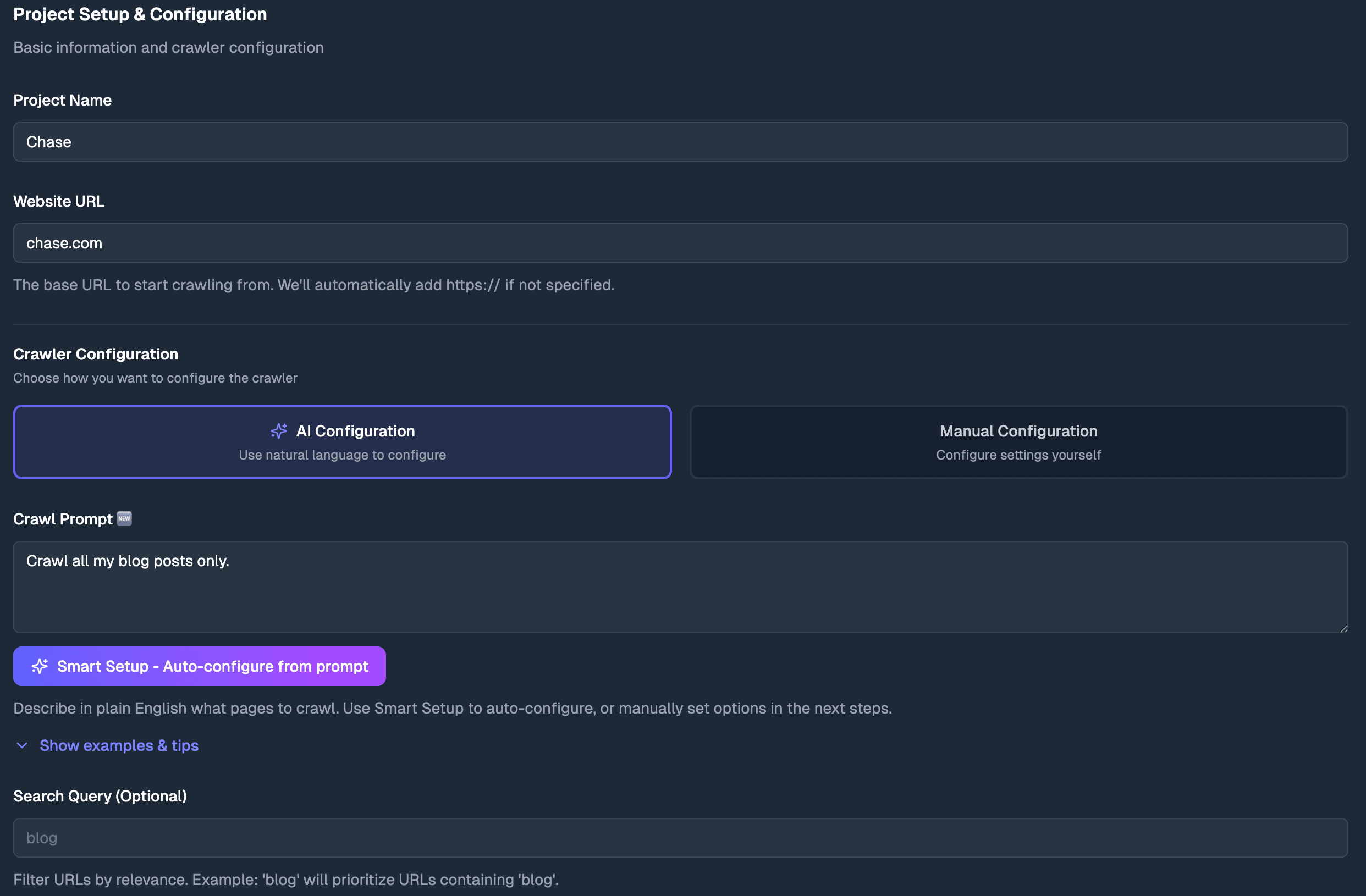

Smart Crawling That Understands Your Content

AI-powered website crawling with natural-language instructions, JavaScript rendering for single-page applications (SPAs), and intelligent content extraction. Crawl React, Vue, and Angular sites with zero configuration: just tell us what matters.

Smart Crawling

Everything you need to analyze your website

Our intelligent crawling system automatically discovers every page on your website, extracts its content, and analyzes it, giving you complete visibility into your internal linking opportunities.